“Volunteers have built a wireless Internet around Jalalabad, Afghanistan, from off-the-shelf electronics and ordinary materials.” Source of caption and photo: online version of the NYT article quoted and cited below.

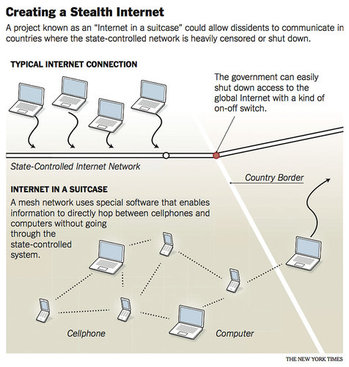

The main point of the passages quoted below is to illustrate how, with the right technology, we can dance around tyrants in order to enable human freedom.

(But as a minor aside, note that in the large, top-of-front-page photo above, Apple is once again visibly the instrument of human betterment. One imagines a fleeting smile crossing the face of entrepreneur Steve Jobs, somewhere, before he turns to his next challenge.)

(p. 1) The Obama administration is leading a global effort to deploy “shadow” Internet and mobile phone systems that dissidents can use to undermine repressive governments that seek to silence them by censoring or shutting down telecommunications networks.

The effort includes secretive projects to create independent cellphone networks inside foreign countries, as well as one operation out of a spy novel in a fifth-floor shop on L Street in Washington, where a group of young entrepreneurs who look as if they could be in a garage band are fitting deceptively innocent-looking hardware into a prototype “Internet in a suitcase.”

Financed with a $2 million State Department grant, the suitcase could be secreted across a border and quickly set up to allow wireless communication over a wide area with a link to the global Internet.

For the full story, see:

JAMES GLANZ and JOHN MARKOFF. “U.S. Underwrites Internet Detour Around Censors.” The New York Times, First Section (Sun., June 12, 2011): 1 & 8.

Source of graphic: online version of the NYT article quoted and cited above.